
I’m not the OP, but you can get 8 TiB SSDs. They are spendy but doable, and no spinning disks are required. The benefit of a NAS-based solution is that you can put a bunch of cheap SSDs in it.

Not a direct answer, but the CRT guy has a series on industrial computers built for different environments, which could provide inspiration.

Some of them have direct DC inputs, some have anti-vibration designs, some have massive passive cooling!

The little guys series: https://www.youtube.com/watch?v=EP3aKEG79DM&list=PLec1d3OBbZ8LGjvbb0GQwlQxWXmI2PA88

I think a Synology box would work for you, or a TrueNAS design; you could just build one of their motherboards into your own ITX case. They use good, robust, anti-vibration hardware with mobile low-power CPUs, selected for robustness and minimal heat. Stick it in a cupboard and forget about it; they run containers and VMs.

Depending on your requirements, you can pick up used gear quite cheaply; set alerts on Craigslist/Marketplace/Kijiji. For example, you can get an access point for around $30 used and host your own network controller in a container to configure it.

If you want single-pane-of-glass management of your whole network, it’s going to be spendy no matter which ecosystem you go with.

Fair enough. You can’t go wrong with Ubiquiti, MikroTik, or Grandstream for radios.

True, but you can use your gateway to cut the Google Wifi units off from Google and still use the radios. No need to buy new hardware.

Heck, you can put OpenWrt on some Google Wifi models: https://openwrt.org/toh/google/wifi

My advice stays the same: work with what you have first and save your budget. Then, SLOWLY and after doing research, buy one thing at a time and fit it in.

Your advice is good if you just want the fastest way to de-Google yourself, but I think the OP wants to run a homelab to learn and understand how it all works.

Do one thing at a time, and don’t buy equipment unless you have an actionable use case for it.

- ISP CPE in bridge mode
- One of the boxes can be your gateway
- You can keep using the Google Wifi
- You can play around with Proxmox, Xen, etc. to run a bunch of containers or virtual machines that do different things on your network. I think you can do it all with your current hardware
- IPv6
- Depending on your gateway, you may be able to override the DNS settings for a few domains that you use internally

4·15 days ago

IPMI interface?

Wow. Where is all this hate coming from?

People like to experiment, tinker, and try things in their home lab that would scale up in a business, just to prove they can do it. That’s innovation. We should celebrate it, not quash it.

Okay. Do you want to debug your situation?

What’s the operating system of the host? What’s the hardware in the host?

What’s the operating system in the client? What’s the hardware in the client?

What does the network look like between the two, including every cable and switch?

Do you get a good enough experience if you’re streaming just a single monitor instead of multiple monitors?

Remember, the original poster here was talking about running their own self-hosted GPU VM, so they’re not paying anybody else for the privilege of using their hardware.

I personally stream with Moonlight on my own network and have no issues; from my perspective, it’s just like being at the computer.

If it doesn’t work for you, fair enough, but it can work for other people, and I think the original poster’s idea makes sense. They should absolutely run a GPU VM cluster and have fun with it; it would be totally usable.

Fair enough. If you know it doesn’t work for your use case that’s fine.

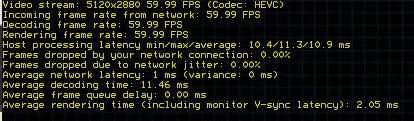

As demonstrated elsewhere in this discussion, GPU HEVC encoding adds only about 10 ms of latency, and the stream can then transit a fiber optic network at very low latency.

Many GPUs have HEVC decoders on board, including the ones in cell phones. Most newer Intel and AMD CPUs have an HEVC decode pipeline as well.

I don’t think anybody’s saying a self-hosted GPU VM is for everybody, but it does make sense for a lot of use cases. And that’s where I think our schism is coming from.

As for the $2,000 fiber transducer… it’s doing the exact same thing, just with more specialized equipment and maybe slightly lower latency.

Can you define what acceptable latency would be?

Local network ping (like corporate networks): 1-2 ms

Encoding and decoding delay: 10-15 ms

So roughly 11-17 ms in total; call it ~20 ms of latency
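As a back-of-the-envelope check, here is a small sketch that sums the best and worst cases of the two figures quoted above (the stage names are just labels). It lands at 11-17 ms, so ~20 ms is a conservative round-up. It does not model other contributors such as display refresh or input polling.

```python
# Rough end-to-end latency budget for streaming a self-hosted GPU VM over a LAN,
# using only the figures quoted above (ranges in milliseconds).
from typing import Dict, Tuple

BUDGET_MS: Dict[str, Tuple[float, float]] = {
    "local network ping": (1.0, 2.0),
    "encode + decode": (10.0, 15.0),
}


def total_latency(budget: Dict[str, Tuple[float, float]]) -> Tuple[float, float]:
    """Sum the best-case and worst-case values of every stage."""
    low = sum(lo for lo, _ in budget.values())
    high = sum(hi for _, hi in budget.values())
    return low, high


if __name__ == "__main__":
    low, high = total_latency(BUDGET_MS)
    print(f"{low:.0f}-{high:.0f} ms")  # prints "11-17 ms"
```

Add your own stages (e.g. a Wi-Fi hop) to the dictionary to see how the budget shifts.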

Real-world example

Fiber isn’t some exotic, never-seen technology; it’s everywhere nowadays.

Moonlight literally does what you want today, using HEVC encoding directly on the GPU.

Try it out on your own network now.

Yes, for some definition of ‘low latency’.

GeForce Now, shadow.tech, and Luna all demonstrate that this is done at scale every day.

Do your own VM hosting in your own datacenter and you can knock off 10-30ms of latency.

However you define low latency, there is a way to approach it iteratively at different costs. As technology marches on, more and more use cases are going to be ‘good enough’ for virtualization.

Quite frankly, if you have an all-optical network, being 1 m away or 30 km away doesn’t matter.
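To put a number on the distance claim, here is a sketch of fiber propagation delay alone. It assumes a refractive index of about 1.47, a typical value for silica fiber rather than a measured one. Under that assumption, 30 km works out to roughly 147 µs one way (about 0.3 ms round trip), small next to the 10-15 ms encode/decode cost. Switches, transceivers, and routing detours are not modeled.

```python
# Propagation delay over optical fiber: distance / (speed of light in vacuum / refractive index).
# FIBER_INDEX = 1.47 is an assumed typical value for silica fiber, not a measured one.
SPEED_OF_LIGHT_M_PER_S = 299_792_458
FIBER_INDEX = 1.47


def one_way_delay_us(distance_m: float, index: float = FIBER_INDEX) -> float:
    """One-way propagation delay in microseconds (ignores switches, transceivers, encoding)."""
    speed_in_fiber = SPEED_OF_LIGHT_M_PER_S / index  # roughly 2.04e8 m/s
    return distance_m / speed_in_fiber * 1_000_000


if __name__ == "__main__":
    for distance in (1, 30_000):
        one_way = one_way_delay_us(distance)
        print(f"{distance:>6} m: one-way {one_way:.3f} us, round trip {2 * one_way:.3f} us")
```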

Just so we are clear, local isn’t always the clear winner. There are limits on how much power, cooling, noise, storage, and size people find acceptable in their work environment, so every application involves some trade-off between fully local and distributed.

100% ^^^ This.

You could do everything with OpenStack, and it would be a great learning experience, but expect to dedicate about 30% of your life to running and managing it. When it just works, it’s great… when it doesn’t… oh boy, it’s like a CRPG that will unlock your hardware after you finish the adventure.

11·16 days ago

This is a terrible idea. No, really.

Any systems that share power and ground (i.e. are on the same bus) should be kept on the same power supply/domain.

Even if (!!!) it doesn’t fry your computer when one power supply goes off but the other stays on, the system will absolutely not be stable and will behave in unexpected ways.

DO NOT DO THIS.

4·22 days ago

Good article, nice use of data.

The device you’re thinking of is rated at 42 dB of noise. You should be aware of that; I don’t think it’s actually fanless.

Noise is going to be a huge factor for your home lab, so make sure you look at the data sheet for whatever you’re about to buy and check its rated noise level.